In mathematics, the logarithm is the inverse function to exponentiation. That means the logarithm of a given number x is the exponent to which another fixed number, the base b, must be raised, to produce that number x.

Wikipedia

Introduction

If you want to see the original post and refresh your knowledge on Big O notation please click here.

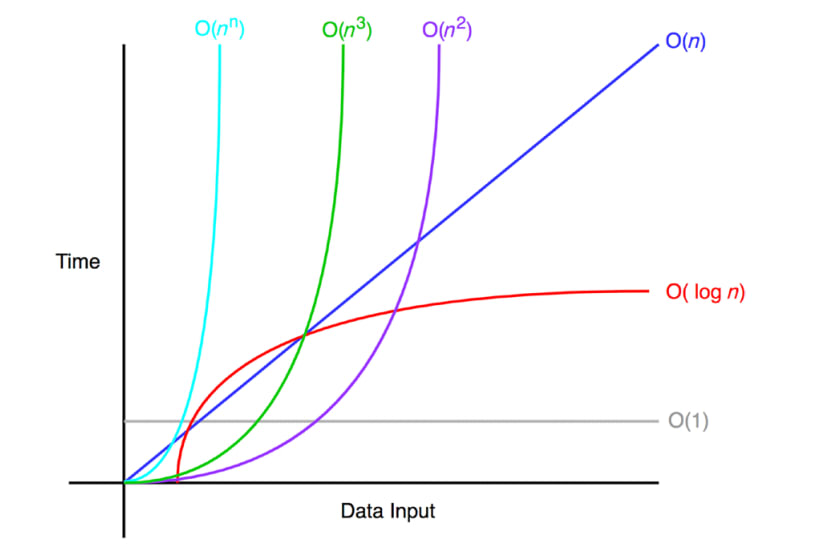

In the last part, we clarified and deciphered the Big O notation, which tells us how fast and performant our code is. We’ve seen and explored some examples of different notations such as O(1), O(n), and O(n2).

There is another common variation of Big O runtime. Take a look at the image below, in red we see the logarithmic time complexity. Wait, logn. What? Well, if you (like me) lost math at the exact same point letters were introduced, do not despair!

It might be obscure at first, but this post will try to hopefully make it clearer and easier to understand.

So lets start!

The logarithm

If we have any number b such that b > 0 and b ≠ 1, and we have x > 0 then we can say that the ‘log base b of x’ is y = logbx which is equivalent to by = x.

And that’s it! If you didn’t catch it at first, logarithms and exponents are just inverse functions.

y = logbx <=> by = x

B is the base, x is the argument, and y is the solutions to the equation.

In the case above y = logbx is called the logarithmic form and by = x is the exponential form.

Log (the combination of letters) doesn’t mean anything – it is just the name of the function that denotes the logarithmic operation. So if we see log anywhere in our mathematical problems, we will be sure that we are dealing with logarithms.

The parameter b being subscripted tells us the base of the operation, and x is the parameter.

We are just asking – what number do we need as the exponent to b in order to get x as the final result. An easy mnemonic method to remember in what correlation logarithms and exponents are (which I use often) is to imagine the b ‘growing’ and the x always ‘running right from the equal sign’ so that the equations fit.

Let us run through a couple of examples to walk one through on how we could solve issues like these.

What would be the result of log416?

Getting the solution straight ahead might be hard. The best way to determine the solution would be to convert the formula into exponential form. Finding log416 immediately might seem daunting but knowing that log416 is the same as 4? = 16 suddenly seems a lot easier! What exponent are we looking for here so that 4 multiplied by itself gives 16? The solution is of course 42 = 16, so the solution of log416 is 2.

What is the result of log6216?

Again we convert the logarithmic form into exponential. log6216 is equal to 6? = 216. What exponent do we need here? 6 multipled by itself is 36. So it is not 2, we need a bigger exponent! We should try it one more time – 36 * 6 = 216! We have found our exponent. It is three, so the solution of log6216 is 3.

What is the result of log\frac{1}{3}81?

Now, this one is equal to (\frac{1}{3})? = 81. Lets do the calculations (\frac{1}{3})-4 = 34 = 81. So now we have that log\frac{1}{3}81 = -4.

For more information about logarithms you can check out this page. We touched on the subject enough to properly understand logarithmic time complexity, but you can take a look at the link above for more exercises, more properties of logarithms, and some special logarithmical types!

Logarithmic Time Complexity

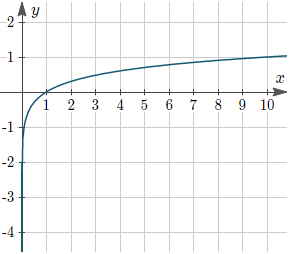

The image below shows us how a logarithm scales:

Quite efficient! Even if we increase the size of the inputs, the value will start to converge at a certain point, so it is not a wonder that after the constant complexity, the logarithmic time complexity is the most efficient one!

Logarithmic time complexity most commonly occurs when a paradigm known as Divide and Conquer is involved. Divide and Conquer is a programming paradigm that breaks the issue into smaller subproblems. Each subproblem is small enough that it can be solvable, and finally, after the computations are done the subproblems are combined. Some of the standard algorithms that use Divide and Conquer are Binary Search, Quicksort, and Merge Sort.

The diagram below shows the merge sort algorithm, the array input is divided, first into two arrays, then into four, and it will keep on dividing itself until it becomes small enough that the individual items can be sorted. Afterwards, the now separated chunks get combined together to get the final result – a sorted array.

Logarithmic Time Complexity Example

O(LOG(N))

Just to make something clear here. You probably noticed that we don’t have a subscripted value when the logarithmic notation is involved. We just write O(logn).

In the examples above we had to write the base, because we solved different mathematical variations of logarithms. If there is no base specified then the base value by default is 2. So log100 would be equal to log2100.

Now let us look at an interesting problem.

function binarySearch(array, key) {

var low = 0;

var high = array.length - 1;

var mid;

var element;

while (low <= high) {

mid = Math.floor((low + high)/ 2)

element = array[mid]

if (element < key) {

low = mid + 1;

} else if (element > key) {

high = mid - 1;

} else {

return mid;

}

}

return -1

}

binarySearch([1, 2, 4, 6, 7, 9, 13, 14, 15, 16, 18, 22, 25, 26], 9); // 5

BinarySearch method takes two arguments, an array, and some key that is supposed to be in the array.

- We define initial variables. Low is by default zero pointing to the first element in the array, high will be the length of the array minus one (pointing to the last element in the array), and we also declare but not yet define mid and the element variables.

- For as long as the low value is smaller or equal than the high value, we will run the while loop.

- We find the middle index (by adding and dividing the current low and high values), and set the current element to the value found on that index inside the array. If the element is less than the key we’re looking for, we redeclare the low value, if the element is bigger than the key, we redeclare the high value, and we go back to step 2.

- Checking for the exact value of the element. If the element is neither bigger nor lower than the key value, then we’ve found our element!

- If we went through the loop without returning the key value, then that value didn’t even exist in the array in the first place! We return just -1.

The algorithm is visually represented below with the input provided in the code snippet.

Our search value is 9.

First our middle value is 13 and 9 is less than 13, so we ignore all the values larger than 13!

Our next middle value is 4, which is lower than 9 so we ignore all the values less or equal than 4.

Our next middle value is 7, which is again lower than 9 so we ignore all the values less or equal than 7.

Our final value is 9, and that is the number we are looking for!

This solution ‘divides’ the time required to get to the result by two. Or, in other words if we would double our input, we would only need one additional step in order to get to the solution.

Conclusion

We have shown that the logarithmic notation isn’t that intimidating and that we can easily grasp the logic behind it with only a little bit of mathematics. An algorithm running this complexity is quite an efficient one, but sometimes often this complexity isn’t applicable to the algorithm at hand.

If you want to check out more articles – click here.