Big O notation is a mathematical notation that describes the limiting behavior of a function when the argument tends towards a particular value or infinity.

Wikipedia

Introduction

Even if you’ll never use Big O in your professional career, it is still important to know what it is. And it can be valuable grasping the concept – knowing how to classify certain algorithms based on the changes in input size comes in handy during technical interviews, coding challenges or huge projects where performance matters greatly.

Usually when working on a problem, the developer follows a single principle whilst looking for an implementation of a certain algorithm among multiple versions. I NEED THIS TO WORK.

If the first solution works. Amazing. Let’s use that one.

If not, see what the hell is happening, change some stuff until it works, and pick up the second solution, or the third… or any that comes after that. Anything, as long as it works.

‘It works’ sometimes isn’t good enough

But what if we want to go a bit deeper, more into detail. What if we want to compare those multiple implementations (those who work) and to classify them based on different characteristics. What if we want to identify inefficiencies in our solutions, or discuss trade-offs between different variations. How do we even define ‘better’ when it comes to code? Do we consider better solutions faster ones, or those who use less resources, or those who are more readable?

Big O notation is a way to describe the relationship between the inputs of the algorithm and the time needed to implement that algorithm. In short, based on the size of the input, Big O notation tells us how fast the algorithm will run.

The definition

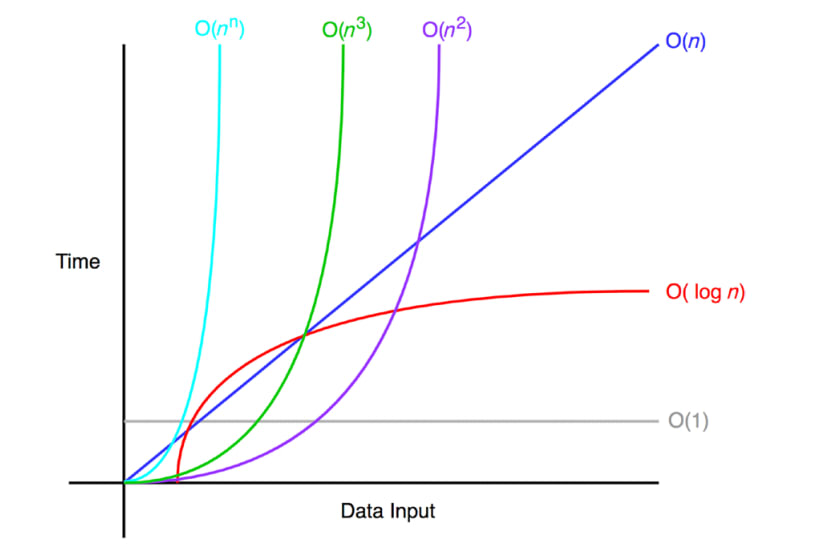

Big O notation describes the performance or the complexity of an algorithm. It does this by counting the number of operations inside the algorithm, based on the input. Still seems baffling, right? Well, look at the image below. If we have a certain input n, and if we increase the size of that input, the runtime of different algorithms will be different.

If you’re scratching your head even more than when you just started reading this article, look at the following example. Let’s solve this interview question.

Write a function

sumTo(n)that calculates the sum of numbers1 + 2 + ... + n.

What comes to mind first? The usual solution using the for loop. We will iterate through the numbers from 1 to n and then sum them up as they come along.

function sumTo(n) {

let sum = 0;Using the for loop w

for (let i = 1; i <= n; i++) {

sum += i;

}

return sum;

}

console.log(sumTo(5)) // 1 + 2 + 3 + 4 + 5 = 15

n (the input) is important here, because, as we increase n, JavaScript has to create one more operation due to the for loop (JavaScript needs to add another number to the sum).

So, if we sent 3 as the parameter, the for loop would run three times, and do the addition three times. If 5 was the parameter, the addition would be called 5 times, if 100 was the parameter it would be called 100 times, if 1000… you get the point.

This solution is dependent on the parameter n, and the number of operations grow as n grows. We can say that the runtime of this algorithm is directly correlated to the input – O(n).

Now take a look at another solution of the same problem:

function sumTo(n) {

return n * (n + 1) / 2;

}

console.log(sumTo(5)); // 5 * (5 + 1) / 2 = 30 / 2 = 15

If you want to read about finite and infinite sums and why this formula works, please check this link, and you can read about the proof here. Basically, the formula n * (n + 1) / 2 will find the sum of all numbers between 1 and n. How many operations do we have here? Well, we have the multiply, then the addition and lastly the division, so no matter what number we input we will always have 3 operations (3 is a constant, it is considered insignificant so we can reduce it to 1). So, this algorithm runs at a constant speed. No matter what the input, the time to run this function will always remain the same – O(1).

Big O examples

O(1)

An algorithm is running at O(1) if the result is not dependent on an input variable or if inputs don’t affect how many operations need to be performed. O(1) usually outperforms all of the other notations. You saw one example up there, and here are a couple more:

function sumNumbers(n1, n2) {

console.log(n1 + n2)

}

function printMultiple(n) {

for (let i = 0; i < 10; i++) {

console.log(i * n)

}

}

var arr = [ 1,2,3,4,5];

arr[2]; // => 3

The first function (sumNumbers) has two values as inputs (n1 and n2), and they are not affecting the number of operations (there is only one operation in the body of the function – addition), so the runtime isn’t dependent on the inputs. It always runs at a constant time – O(1).

The second function (printMultiple) seems interesting, we do have a foor loop, and we saw that a loop is correlated to a higher runtime. Well, not in this case because the loop only runs 10 times, so again, regardless of n only ten multiples will be printed (we could say that we have 10 operations, but we will see that we can simplify Big O expressions, so the final runtime will be again O(1)).

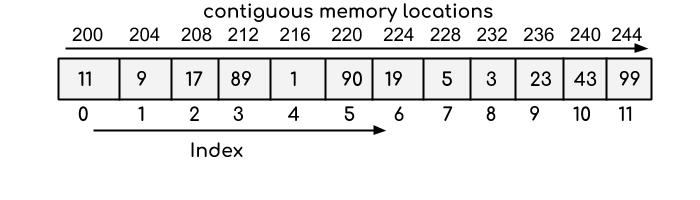

The third example is also running in a constant time. Why is accessing arrays always constant? Because of the way arrays are structured, elements of an array are located in a continuous memory sequence, so any item of the array is easily computed by using the formula – memory_address + item_size * index, only requiring one operation.

O(n)

O(n) describes an algorithm whose performance grows linearly and in direct proportion to the size of the input. The time complexity increases as the size increases and they increase at the same rate. Some examples are below:

function loggingNumbers(n) {

for (let i = 1; i <= n; i++) {

console.log(n)

}

}

function loggingNumbers2(n) {

for (let i = 0; i < n; i++) {

console.log(n)

}

for (let i = 0; i < n; i++) {

console.log(n)

}

}

The first loggingNumbers method takes an argument, and the for loop is dependent on that argument, meaning that it controlls how many iterations the loop will run. If we send 5, we will output numbers from 1 to 5, if we send 10 from 1 to 10, and so on, so the output is correlated to the input and it means that this method grows linearly – O(n).

The second loggingNumbers2 method is interesting, it has two for loops running one after another (they are not nested). So, one runs at n, another one also, so the final runtime is O(2n). Or is it? Again, we can simplify the expression, remove the constant, and we would only get O(n) as the runtime.

O(n2)

function multiplicationTable(n) {

for (let i = 0; i <= n; i++) {

for (let j = 0; j <= n; j++) {

console.log(i * j);

}

}

}

What is happening here? The multiplicationTable method has two for loops, and each is iterating over n, meaning that in total we get n * n iterations. The runtime will be O(n2). It’s worth to note here that the runtime of nested loops is equal to the amount of loops being nested, if we had three of them the runtime would be O(n3) and so on. Additionally, check the visualization graph above to see the rate at which the curve appears if the runtime is quadratic or cubic, which means that as we increase the input, the runtime increases at an even faster rate, making the algorithm not very efficient.

Simplifying Big O expressions

As seen above insideloggingNumbers2 method we have removed the 2 from the solution.

Why?

Let’s us look at this mathematically. If n keeps increasing to infinity, the constant 2 isn’t changing the growth rate of the algorithm, the linear function is still growing linearly. This doesn’t mean that the constants are irrelevant or not meaningful. It just means that the Big O notation doesn’t care about constants because Big O only describes the growth rate in terms of mathematical functions, not their actual runtime.

The two biggest rules here are that constants don’t matter (O(2n) becomes O(n), and O(10) becomes O(10 * 1)) and that smaller terms don’t matter (O(n + 10) becomes O(n), keep in mind, the 10 isn’t affecting the growth rate by much, if we keep increasing the n to infinity).

Conclusion

We’ve defined the Big O notation, shown some of the most common examples and explained why and how we simplify . In the next part we’ll go through the logarithmic runtime, which is more efficient than linear (O(n)) but less efficient than constant (O(1)).

If you want to check out more articles – click here.